As a crawl grows, you run into problems with limits, parallel requests, language choices, speed, and cost. Tuning ScrapingBee concurrency helps you respect crawling limits, speed up data extraction, reduce errors, and choose the right pool size.

This guide gives simple steps that are easy to copy and adapt to your own setup. It explains how to apply ScrapingBee concurrency in Python, Node.js, and Go. By the end, you will know how to work within crawling limits, monitor a run, test small, measure results, and scale with confidence.

What Concurrency Means In Real Crawls

Concurrency means how many requests run at the same time. You make a worker pool and set a size. Each worker handles one request at a time. When a worker finishes, it takes the next URL.
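
The worker pool described above can be sketched in Python with asyncio. This is a minimal sketch, not a definitive implementation. The names `run_pool` and `fetch` are hypothetical, and `fetch` stands in for whatever coroutine makes your real request.

```python
import asyncio

async def run_pool(urls, fetch, pool_size):
    """Process urls with a fixed number of workers.

    `fetch` is a hypothetical coroutine: fetch(url) -> result.
    Results come back in completion order as (url, result) pairs.
    """
    queue = asyncio.Queue()
    for url in urls:
        queue.put_nowait(url)
    results = []

    async def worker():
        # Each worker handles one request at a time,
        # then takes the next URL until the queue is empty.
        while True:
            try:
                url = queue.get_nowait()
            except asyncio.QueueEmpty:
                return
            results.append((url, await fetch(url)))

    # pool_size is the concurrency: that many requests can be in flight.
    await asyncio.gather(*(worker() for _ in range(pool_size)))
    return results
```

With this shape, changing the concurrency means changing one number, which makes later tests easy to repeat.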

The right ScrapingBee concurrency depends on your code, page latency, JavaScript rendering, and retry rules. A big number is not always best. The right number gives steady speed, few errors, and a fair price per page. You also need to respect the concurrent request limit that your ScrapingBee plan allows.

Why Not Just Max It Out

Some teams push the highest number. At first, it may look fast. Soon, the target site may push back and send 429 or 5xx errors. Every bad try wastes credits and time, so use a ScrapingBee Premium Proxy only for hard pages and not to force unsafe speed. A smaller, steady flow often wins. When you see many 429s, you are hitting a rate limit, so slow down and try again later.

A Simple Sizing Method You Can Trust

Finding the right concurrency needs tests, not guesses. Begin with a small pool, then raise it slowly. Watch how latency, errors, and credits behave over a full run. Keep notes so anyone can repeat the steps later.

- Median Latency: Track the middle response time. If more workers do not lower this time, extra work in parallel does not help.

- Error Rate: Watch for 429s and 5xx. When these rise, you have pushed too far.

- Credits Per Success: Check the cost per good page. Retries, rendering, and proxies can hide rising spend.

Step back a little from the point where errors grow or time stops improving. Save this as your ceiling. Write down the test and the result. After any change in code or servers, run the same test again. If your plan tier changes, check your ceiling against the concurrency limit of the new ScrapingBee pricing tier to keep the numbers correct.
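
The three numbers above can be computed from a simple log of requests. The sketch below assumes each record is a dict with `status`, `seconds`, and `credits` keys. These field names are illustrative, not part of any ScrapingBee response format.

```python
from statistics import median

def summarize(records):
    """Summarize one test run.

    records: list of dicts with hypothetical keys
      'status' (HTTP status), 'seconds' (total time), 'credits' (credits spent).
    """
    if not records:
        return {"median_latency": None, "error_rate": 0.0,
                "credits_per_success": None}
    successes = [r for r in records if 200 <= r["status"] < 300]
    # Count 429s and 5xx as the errors that signal "pushed too far".
    errors = [r for r in records if r["status"] == 429 or r["status"] >= 500]
    total_credits = sum(r["credits"] for r in records)
    return {
        "median_latency": median(r["seconds"] for r in records),
        "error_rate": len(errors) / len(records),
        # Credits spent on failed tries are charged to the good pages.
        "credits_per_success": (total_credits / len(successes)
                                if successes else None),
    }
```

Dividing all credits by successful pages only is a deliberate choice here, so retries and failed tries show up as a higher cost per good page.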

Handling Target Variability

Not every site likes the same pace. News pages, search pages, and API routes act differently. Set a cap per domain. Keep one queue for each domain with its own limit. A global cap still helps, but domain caps stop one loud host from taking all workers.
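
One way to combine a global cap with domain caps is a semaphore per host plus one shared semaphore. This is a sketch under assumptions: `DomainLimiter` and `fetch` are hypothetical names, and the cap values are placeholders you would replace with tested numbers.

```python
import asyncio
from urllib.parse import urlsplit

class DomainLimiter:
    """Hypothetical helper: one cap per domain plus a global cap."""

    def __init__(self, global_cap, default_domain_cap, overrides=None):
        self._global = asyncio.Semaphore(global_cap)
        self._default = default_domain_cap
        self._overrides = dict(overrides or {})  # host -> cap
        self._domains = {}                       # host -> Semaphore

    def _domain_sem(self, url):
        host = urlsplit(url).hostname or ""
        if host not in self._domains:
            cap = self._overrides.get(host, self._default)
            self._domains[host] = asyncio.Semaphore(cap)
        return self._domains[host]

    async def run(self, url, fetch):
        # Take the domain slot first, so requests waiting on a busy
        # host do not sit on global slots other domains could use.
        async with self._domain_sem(url):
            async with self._global:
                return await fetch(url)
```

Create the limiter inside your running coroutine, for example at the top of `main()`, so the semaphores belong to the same event loop as the tasks that use them.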

Language Patterns That Work

Every stack has a tool to keep limits, line up tasks, and retry with care. The pattern is simple. Hold a cap, keep a queue, and slow down when needed. For quick checks and small tests, ScrapingBee Chrome extensions help you capture request data and copy working headers into your code.

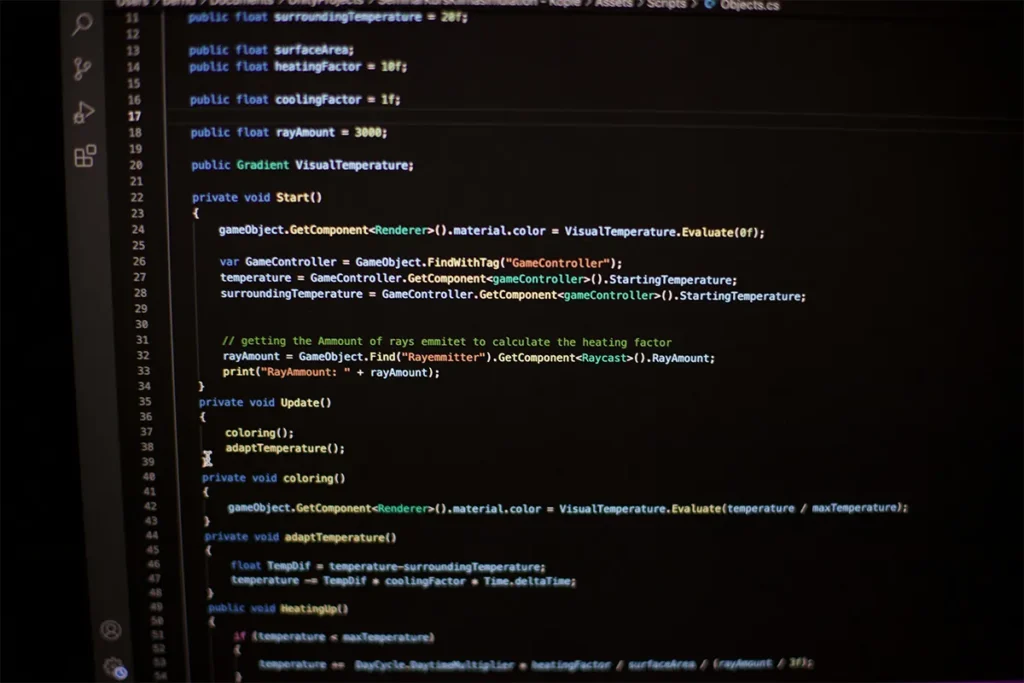

Python: Asyncio With Semaphores:

Use an asyncio Semaphore to hold the maximum in-flight tasks. Wrap the HTTP call with a retry that waits longer after each failure. This keeps the loop busy without too many open tasks. To find more examples of this shape, search for "scrapingbee python asyncio concurrency".
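
A minimal sketch of this pattern follows. It assumes a hypothetical coroutine `fetch(url)` that returns a `(status, body)` pair. The retryable status set and the delay values are illustrative choices, not ScrapingBee defaults.

```python
import asyncio

# Assumption: these statuses are worth another try.
RETRYABLE = {429, 500, 502, 503, 504}

async def fetch_with_retry(url, fetch, sem, max_tries=3, base_delay=0.5):
    for attempt in range(max_tries):
        # The slot is held only while the request is in flight.
        # `async with` releases it even if fetch raises.
        async with sem:
            status, body = await fetch(url)
        if status not in RETRYABLE or attempt == max_tries - 1:
            return status, body
        # Wait longer after each failure, outside the semaphore,
        # so a sleeping retry does not block other requests.
        await asyncio.sleep(base_delay * 2 ** attempt)

async def crawl(urls, fetch, pool_size, **retry_opts):
    sem = asyncio.Semaphore(pool_size)  # maximum in-flight requests
    tasks = (fetch_with_retry(u, fetch, sem, **retry_opts) for u in urls)
    return await asyncio.gather(*tasks)
```

This version creates one task per URL, which is fine for a few thousand URLs. For much larger lists, feeding URLs from a queue keeps the number of open tasks small.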

Node.js: Promise Pooling:

Choose a pool helper like p-limit. It holds a fixed number of active promises. After a failure, call a retry with backoff and jitter. Memory stays stable, and flow stays smooth. For a quick start, search for "scrapingbee node concurrency p-limit" and follow that approach.

Go: Worker Pools:

Start a set number of workers that read from a channel. That number is your pool size. Each worker uses context timeouts and a backoff loop. Results go to a result channel for clean counting. If you need a name to search for this shape, try "scrapingbee go worker pool".

The Role Of Retries, Backoff, And Jitter

Retries are part of scraping. Networks drop. Proxies blink. Servers have bad minutes. Use exponential backoff with jitter. Each retry waits longer and adds a small random delay. Keep retry counts low. Treat many 429s as a sign to slow down. A simple retry backoff strategy keeps tries few and calm.
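
The delay rule can be written as one small function. This is a sketch with assumed values: the base, cap, and jitter sizes are placeholders, and `backoff_delay` is a hypothetical name.

```python
import random

def backoff_delay(attempt, base=0.5, cap=30.0, jitter=0.25, rng=random.random):
    """Seconds to wait before retry number `attempt` (zero-based).

    The wait doubles each time up to `cap`, then a small random
    delay of up to `jitter` seconds is added so retries spread out.
    `rng` is injectable so tests can make the result predictable.
    """
    wait = min(cap, base * (2 ** attempt))
    return wait + rng() * jitter
```

With the jitter switched off, the first four waits are 0.5, 1.0, 2.0, and 4.0 seconds. The cap matters more than it looks, because without it a long retry chain can sleep for minutes.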

When A 429 Is Helpful

A 429 says you hit a limit. This is good feedback. Mark that domain as busy. Slow that queue and try again with backoff. If 429s keep coming, lower the cap more and widen the wait. These steps follow the intent of a 429 rate limit response, so your runs stay polite.
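
Some servers send a `Retry-After` header with a 429, either as a number of seconds or as an HTTP date. Whether a given response carries it is not guaranteed, so the sketch below falls back to a default wait. The function name and the default value are assumptions.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime

def retry_after_seconds(header_value, now=None, default=5.0):
    """Turn a Retry-After header value into seconds to wait."""
    if not header_value:
        return default
    value = header_value.strip()
    if value.isdigit():
        # Form 1: delay in seconds, e.g. "120".
        return float(value)
    try:
        # Form 2: HTTP date, e.g. "Wed, 21 Oct 2015 07:28:00 GMT".
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        return default
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    # A date in the past means no extra wait is needed.
    return max(0.0, (when - now).total_seconds())
```

A reasonable use is to wait for the larger of this value and your own backoff delay, so the server's hint is always respected.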

Credits, Rendering, And Per-Page Economics

Concurrency connects to cost. JavaScript rendering and premium proxies make each page slower and pricier. Render only when a ScrapingBee JS scenario needs it. When you use special routes or residential IPs, credit use may change. Build small tests and check the budget before you scale. Before you tune this part, read how ScrapingBee JavaScript rendering and premium proxies affect concurrency and credits, so you can plan a fair spend.

Plan Tiers And Practical Impact

Your plan may set a top cap for concurrent requests. Your safe number can be lower than the plan. Note the proven cap beside the plan cap. Record any extra cost for a ScrapingBee Post Request if your plan counts it differently from GET. Keep a small chart that maps domain type to a safe value. This stops mistakes and helps new teammates learn fast. If you upgrade or downgrade, confirm the concurrency limit of your new ScrapingBee pricing tier before you push new runs.

Queue Design That Prevents Chaos

A stable queue turns parallel work into a calm flow. Give the queue simple rules so one loud domain cannot break the run. With clear limits and safety valves, operators make quick and safe moves. The parts below keep the pool healthy.

- Domain Buckets: Use one queue and one cap per domain to stop one host from taking all workers.

- Priority Queues: Mark urgent jobs so they move first, yet do not block normal work forever.

- Dead Letter Queue: Send repeated failures to a side queue so the main flow stays clean.

- Circuit Breaker: Pause a domain after many 5xx or 429s to let it cool down.

Run health checks often to be sure these tools still work. Push fake spikes and forced errors in staging to see calm slowdowns, not crashes. Write the steps in a runbook, next to your ScrapingBee extract rules, so on-call staff can act fast. With these parts in place, sharp changes to targets will not cause chaos. These steps match queue best practices that many teams follow.
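
Of the parts above, the circuit breaker is the easiest to get wrong, so here is a minimal sketch. `DomainBreaker` is a hypothetical class, and the threshold and cooldown values are placeholders. It counts consecutive 429 and 5xx responses per domain and pauses that domain for a fixed time.

```python
import time

class DomainBreaker:
    """Pause a domain after several consecutive 429 or 5xx responses."""

    def __init__(self, threshold=5, cooldown=60.0, clock=time.monotonic):
        self.threshold = threshold   # failures in a row before pausing
        self.cooldown = cooldown     # seconds to keep the domain paused
        self._clock = clock          # injectable for tests
        self._failures = {}          # domain -> consecutive failures
        self._open_until = {}        # domain -> time the pause ends

    def allow(self, domain):
        """True if requests to this domain may be sent right now."""
        return self._clock() >= self._open_until.get(domain, float("-inf"))

    def record(self, domain, status):
        """Feed every response status back into the breaker."""
        if status == 429 or status >= 500:
            count = self._failures.get(domain, 0) + 1
            if count >= self.threshold:
                self._open_until[domain] = self._clock() + self.cooldown
                count = 0  # start fresh after the pause
            self._failures[domain] = count
        else:
            self._failures[domain] = 0  # any good response resets the count
```

Workers call `allow(domain)` before taking a job and `record(domain, status)` after each response. Jobs for a paused domain can go back on the queue or to the dead letter queue.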

A Reproducible Benchmark You Can Run

You can test without a heavy setup. Pick public pages that allow polite scraping, or look-alike pages that behave like your targets. Choose 200 to 500 URLs. Try pool sizes like 5, 10, 20, and 40. Log good pages, error codes, median time, and credits used. Show a small table and a line chart. Repeat the same test after any big change.
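
Once the benchmark has one row per pool size, picking the ceiling can be automated. The sketch below assumes each row is `(pool_size, wall_seconds, error_rate)`, where `wall_seconds` is the time for the whole run. The thresholds are illustrative and `pick_ceiling` is a hypothetical name.

```python
def pick_ceiling(rows, max_error_rate=0.02, min_gain=0.05):
    """Pick the largest pool size that is still worth using.

    rows: (pool_size, wall_seconds, error_rate) tuples,
          sorted by pool_size ascending.
    A size is rejected when its error rate passes max_error_rate,
    or when it fails to cut run time by at least min_gain
    (5% by default) compared with the previous size.
    """
    ceiling = None
    prev_seconds = None
    for pool_size, wall_seconds, error_rate in rows:
        if error_rate > max_error_rate:
            break  # errors grow: pushed too far
        if prev_seconds is not None and wall_seconds > prev_seconds * (1 - min_gain):
            break  # time stopped improving
        ceiling = pool_size
        prev_seconds = wall_seconds
    return ceiling
```

For example, with rows `(5, 100, 0.0)`, `(10, 55, 0.0)`, `(20, 30, 0.01)`, and `(40, 29, 0.08)`, the function returns 20, because the run at 40 crosses the error threshold.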

Observability That Guides Decisions

Good data cuts guesswork. Log domain, method, status, time to first byte, total time, retry count, whether you used JavaScript rendering, and the ScrapingBee country codes used. Add alerts for error spikes and time jumps. Tie alerts to auto throttling. If 429s pass a set line, lower the cap for that domain for a short time and send a message.
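
The fields listed above fit in one small record per request. This is a sketch of one possible layout. The field names are assumptions for illustration, not a ScrapingBee log format.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class RequestLog:
    domain: str
    method: str
    status: int
    ttfb_seconds: float    # time to first byte
    total_seconds: float
    retries: int
    rendered_js: bool      # whether JavaScript rendering was used
    country_code: str      # empty string if no country was set

def to_json_line(entry):
    """One JSON object per line is easy to ship to most log tools."""
    return json.dumps(asdict(entry), sort_keys=True)

def share_of_429(entries, domain):
    """Fraction of a domain's requests that came back as 429."""
    rows = [e for e in entries if e.domain == domain]
    if not rows:
        return 0.0
    return sum(e.status == 429 for e in rows) / len(rows)
```

An alert rule can then compare `share_of_429` for the last few minutes against your set line and lower that domain's cap when it is crossed.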

Dynamic Concurrency Control

Static caps are simple. Dynamic caps can be better. Adjust the domain cap in small steps. If success stays high and time stays steady, raise the cap a little. If 429s or 5xx rise, cut the cap by a bit more. This soft rule stops jumpy swings. Log each change with the reason.
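
One common way to build this rule is additive increase with multiplicative decrease: add a small fixed step when things look healthy, and cut by a fraction when errors rise. That choice is an assumption of this sketch, not something the rule above requires. `AdaptiveCap` and its thresholds are hypothetical.

```python
class AdaptiveCap:
    """Adjust one domain's cap once per measurement window."""

    def __init__(self, start, floor=1, ceiling=40, step_up=1, cut=0.75):
        self.cap = start
        self.floor, self.ceiling = floor, ceiling
        self.step_up, self.cut = step_up, cut
        self.history = []  # (old_cap, new_cap, reason) for the log

    def update(self, error_rate, latency_ratio,
               max_error_rate=0.02, max_latency_ratio=1.2):
        """error_rate: share of 429/5xx in the last window.
        latency_ratio: current median time divided by the baseline."""
        old = self.cap
        if error_rate > max_error_rate:
            # Cut by more than one step so recovery is quick.
            self.cap = max(self.floor, int(self.cap * self.cut))
            reason = "error rate above limit"
        elif latency_ratio <= max_latency_ratio:
            self.cap = min(self.ceiling, self.cap + self.step_up)
            reason = "errors low and time steady"
        else:
            reason = "time drifting, holding"
        self.history.append((old, self.cap, reason))
        return self.cap
```

The `history` list gives you the change log with reasons. Set `ceiling` to your tested ceiling or your plan cap, whichever is lower.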

Common Failure Modes And How To Fix Them

Before we fix issues, it helps to name common shapes. Many problems repeat across teams and tools. Once you see the shape, you can pick the right fix fast. The examples below show the most common ones and how to handle them.

- Spiky Errors After A New Release: Roll back to the last version, compare library changes, and run your benchmark again. New HTTP clients, TLS moves, or parser tweaks can change timing in quiet ways.

- Fast Start Then Collapse: Many errors after a brief burst point to overshoot. Lower the domain cap. Add jitter so requests do not land at the same time.

- Per Domain Starvation: One domain eats the pool and slows others. Enforce domain buckets and fair scheduling. Weighted fair queues fix this fast.

- Render Everywhere Syndrome: Rendering is slow and costly. Detect when a page truly needs it. Try a two-step plan. First fetch without render, then render only if needed.
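
The two-step plan for rendering can be sketched as below. Both `fetch` and `looks_complete` are hypothetical callables you would supply: `fetch(url, render_js=...)` returns `(status, html)`, and `looks_complete(html)` checks for the content you expect, such as a known selector or a minimum text length.

```python
def fetch_two_step(url, fetch, looks_complete):
    """Try without rendering first, render only if the page needs it.

    Returns (status, html, rendered) where `rendered` says whether
    the slower, costlier rendered request was used.
    """
    status, html = fetch(url, render_js=False)
    # A non-200 status is not a rendering problem, so hand it back
    # to the caller's retry logic instead of rendering.
    if status != 200 or looks_complete(html):
        return status, html, False
    status, html = fetch(url, render_js=True)
    return status, html, True
```

Logging the `rendered` flag per domain shows which sites truly need rendering, so you can skip the first step for those and avoid paying twice.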

Workflow Examples By Language

Different stacks take different paths to the same goal. These small blueprints show safe limits, clean retries, and calm shutdowns. Use them as a start, then fit them to your style. Keep the control points the same.

- Python: Create a Semaphore(pool_size) and an asyncio queue. Add a retry decorator with backoff and jitter. Workers acquire the semaphore, make the request, and release in a finally block.

- Node.js: Return a promise from the request function. Use p-limit to hold a fixed number of active promises. On failure, call a retry helper with backoff and jitter. Keep a small metrics object for counts and times.

- Go: Make a buffered jobs channel. Launch n workers where n is the pool size. Each worker uses context timeouts and a backoff loop. Send results to a result channel and use a WaitGroup to close cleanly.

Governance And Documentation

Teams work best with simple rules on paper. Save your chosen caps in the repo. Link each cap to a benchmark run. List the error lines that trigger throttling, and keep your ScrapingBee Pagination rules with them. Add a short runbook for lowering caps during a spike. These notes keep launches calm.

Final Checks Before Production

Production checks stop small slips from causing long-term pain. Review network, retries, and shutdowns before a scale-up. Walk through the list with a partner. Try failure drills to confirm safety tools work. This shared step builds trust and smooth launches.

- Dual Timeouts: Set timeouts in the HTTP client and in the job runner so stuck sockets cannot block workers.

- Smart Retries: Do not retry 4xx client errors other than 429, then use exponential backoff with jitter for short-term problems such as 429s, 5xx responses, and timeouts.

- Explicit Network Settings: Make proxy and rendering choices clear on each request, not hidden in defaults.

- Durable Queues: Keep the queue safe across crashes and restarts so work is not lost.

- Graceful Shutdowns: Drain active jobs, close channels, and free resources to avoid a bad state during deploys.

After this, look at logs and alerts one more time in staging. Check that dashboards show the right fields, alerts go to the right room, and throttling switches are easy to find. A short drill now removes drama later. Your first big batch should feel routine.
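
Two items from the checklist, the job-level timeout and the retry decision, are small enough to sketch together. The names `should_retry` and `run_job` are hypothetical, and `fetch` is assumed to be a coroutine whose HTTP client already has its own timeout set.

```python
import asyncio

def should_retry(status):
    """Retry rate limits and server errors, skip other client errors."""
    return status == 429 or 500 <= status <= 599

async def run_job(url, fetch, job_timeout=30.0):
    """Second timeout, at the job runner level.

    Even if the client timeout fails to fire on a stuck socket,
    the worker is freed after job_timeout seconds.
    Returns None on timeout so the caller can count and requeue it.
    """
    try:
        return await asyncio.wait_for(fetch(url), timeout=job_timeout)
    except asyncio.TimeoutError:
        return None
```

Set the job timeout a little above the client timeout, so the client gets the first chance to fail cleanly and the job runner acts only as the safety net.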

Table: ScrapingBee Concurrency

| Topic | What To Set | Watch | If This Happens | Do This |
|---|---|---|---|---|
| Pool Size | Start 5 → 10 → 20 → 40 | Median response time | Time stops getting better | Step back one level |
| Rate Limits | One cap per site | 429 count | Many 429 responses | Lower the cap and add backoff |
| Python | Asyncio with semaphore | In-flight tasks | Event loop overload | Tighten the pool and retry |
| Node.js | Promise pool | Active tasks | Memory climbs | Lower the pool and add jitter |
| Go | Fixed workers and channels | Queue depth | Jobs pile up | Resize workers and use backoff |
| Retries | Backoff with jitter | Retry count | Many repeats | Cut tries and slow the pool |
| Rendering | Only when needed | Page time and credits | Slow pages, high cost | Skip rendering by default |
| Proxies | Match route to task | Credit spend | Spend spikes | Switch route and retest |
| Plan Tiers | Check caps for each plan | Plan cap versus tested cap | Mismatch found | Align to the lower limit |
| Queues | Buckets per site | Starvation and spikes | One site hogs | Use fair scheduling and a breaker |

Conclusion

ScrapingBee Concurrency becomes simple when you treat it like a steady pipe. Choose a pool, shape traffic per domain, and use kind retries that fit your budget and the target rules. Small, repeatable tests show the curve where more workers stop helping and start hurting. For neutral guidance on rate limits and Retry-After behavior, see HTTP 429 Too Many Requests. With clear dashboards and short runbooks, your crawler stays stable even when sites act in new ways.

When you use these habits together, batches finish on time, credits stay safe, and your footprint stays polite. Teams can grow with confidence because concurrency becomes a measured setting, not a guess. New engineers will learn fast, and leaders will see smooth delivery without late fire drills or surprise spending.